Spack

We use the Spack package manager to provide a collection of common HPC software packages. This page explains how to use the central Spack installation to build your own modulefiles.

Table of Contents

- Quick Setup

- Guide to Using Spack

- Central Spack Installation

- Overriding Package Definitions

Quick Setup with rub-deploy-spack-configs

You can copy the configuration files described in Central Spack Installation (upstreams.yaml, config.yaml, modules.yaml, compilers.yaml) directly to your home directory with the rub-deploy-spack-configs command:

rub-deploy-spack-configs

Add these lines to your ~/.bashrc to activate Spack at every login:

export MODULEPATH=$MODULEPATH:$HOME/spack/share/spack/lmod/linux-almalinux9-x86_64/Core

. /cluster/spack/0.23.0/share/spack/setup-env.sh
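The two lines above append to MODULEPATH every time a shell starts, so nested shells can accumulate duplicate entries. The following is a minimal sketch of a more defensive ~/.bashrc fragment. The helper name add_to_modulepath is hypothetical; the paths are the ones given above.

```shell
# Hypothetical helper: append a directory to MODULEPATH only if it is not listed yet.
add_to_modulepath() {
    case ":${MODULEPATH:-}:" in
        *":$1:"*) ;;                                        # already present
        *) MODULEPATH="${MODULEPATH:+$MODULEPATH:}$1" ;;    # append, no leading colon
    esac
    export MODULEPATH
}

add_to_modulepath "$HOME/spack/share/spack/lmod/linux-almalinux9-x86_64/Core"

# Source the central Spack environment only if the file is readable on this machine.
spack_setup=/cluster/spack/0.23.0/share/spack/setup-env.sh
if [ -r "$spack_setup" ]; then
    . "$spack_setup"
fi
```

The existence check keeps the same ~/.bashrc usable on machines where /cluster is not mounted.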

Guide to Using Spack

Below is a detailed guide on how to effectively use Spack.

- Searching for Packages

- Viewing Package Variants

- Enabling/Disabling Variants

- Specifying Compilers

- Specifying Dependencies

- Putting It All Together

- Building and Adding a New Compiler

- Comparing Installed Package Variants

- Removing Packages

Searching for Packages

To find available packages, use:

spack list <keyword> # Search for packages by name

# Example:

spack list openfoam

openfoam openfoam-org

==> 2 packages

For detailed information about a package:

spack info <package> # Show versions, variants, and dependencies

# Example:

spack info hdf5

For a quick search for all available packages in spack, visit https://packages.spack.io/.

Viewing Package Variants

Variants are build options that enable or disable features. List them with spack info <package>:

spack info hdf5

The output includes:

Preferred version:

1.14.3 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.14/hdf5-1.14.3/src/hdf5-1.14.3.tar.gz

Safe versions:

1.14.3 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.14/hdf5-1.14.3/src/hdf5-1.14.3.tar.gz

1.14.2 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.14/hdf5-1.14.2/src/hdf5-1.14.2.tar.gz

1.14.1-2 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.14/hdf5-1.14.1-2/src/hdf5-1.14.1-2.tar.gz

1.14.0 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.14/hdf5-1.14.0/src/hdf5-1.14.0.tar.gz

1.12.3 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.12/hdf5-1.12.3/src/hdf5-1.12.3.tar.gz

1.12.2 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.12/hdf5-1.12.2/src/hdf5-1.12.2.tar.gz

1.12.1 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.12/hdf5-1.12.1/src/hdf5-1.12.1.tar.gz

1.12.0 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.12/hdf5-1.12.0/src/hdf5-1.12.0.tar.gz

1.10.11 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.10/hdf5-1.10.11/src/hdf5-1.10.11.tar.gz

1.10.10 https://support.hdfgroup.org/ftp/HDF5/releases/hdf5-1.10/hdf5-1.10.10/src/hdf5-1.10.10.tar.gz

Variants:

api [default] default, v110, v112, v114, v116, v16, v18

Choose api compatibility for earlier version

cxx [false] false, true

Enable C++ support

fortran [false] false, true

Enable Fortran support

hl [false] false, true

Enable the high-level library

mpi [true] false, true

Enable MPI support

Defaults are shown in square brackets, possible values to the right.

Checking the Installation

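To see which dependencies will be installed, use spack spec. The -I option additionally marks which packages are already installed:

spack spec -I hdf5

The output includes: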

Input spec

--------------------------------

- hdf5

Concretized

--------------------------------

[+] hdf5@1.14.3%gcc@11.4.1~cxx~fortran+hl~ipo~java~map+mpi+shared~subfiling~szip~threadsafe+tools api=default build_system=cmake build_type=Release generator=make patches=82088c8 arch=linux-almalinux9-zen4

[+] ^cmake@3.27.9%gcc@11.4.1~doc+ncurses+ownlibs build_system=generic build_type=Release arch=linux-almalinux9-zen4

[+] ^curl@8.7.1%gcc@11.4.1~gssapi~ldap~libidn2~librtmp~libssh+libssh2+nghttp2 build_system=autotools libs=shared,static tls=mbedtls,openssl arch=linux-almalinux9-zen4

[+] ^libssh2@1.11.0%gcc@11.4.1+shared build_system=autotools crypto=mbedtls patches=011d926 arch=linux-almalinux9-zen4

[+] ^xz@5.4.6%gcc@11.4.1~pic build_system=autotools libs=shared,static arch=linux-almalinux9-zen4

[+] ^mbedtls@2.28.2%gcc@11.4.1+pic build_system=makefile build_type=Release libs=shared,static arch=linux-almalinux9-zen4

[+] ^nghttp2@1.52.0%gcc@11.4.1 build_system=autotools arch=linux-almalinux9-zen4

[+] ^diffutils@3.10%gcc@11.4.1 build_system=autotools arch=linux-almalinux9-zen4

[+] ^openssl@3.3.0%gcc@11.4.1~docs+shared build_system=generic certs=mozilla arch=linux-almalinux9-zen4

[+] ^ca-certificates-mozilla@2023-05-30%gcc@11.4.1 build_system=generic arch=linux-almalinux9-zen4

[+] ^ncurses@6.5%gcc@11.4.1~symlinks+termlib abi=none build_system=autotools patches=7a351bc arch=linux-almalinux9-zen4

[+] ^gcc-runtime@11.4.1%gcc@11.4.1 build_system=generic arch=linux-almalinux9-zen4

[e] ^glibc@2.34%gcc@11.4.1 build_system=autotools arch=linux-almalinux9-zen4

[+] ^gmake@4.4.1%gcc@11.4.1~guile build_system=generic arch=linux-almalinux9-zen4

[+] ^openmpi@5.0.3%gcc@11.4.1~atomics~cuda~gpfs~internal-hwloc~internal-libevent~internal-pmix~java+legacylaunchers~lustre~memchecker~openshmem~orterunprefix~romio+rsh~static+vt+wrapper-rpath build_system=autotools fabrics=ofi romio-filesystem=none schedulers=slurm arch=linux-almalinux9-zen4

It’s always a good idea to check the specs before installing.
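One way to make this check a habit is to wrap both steps in a small shell function. This is only a sketch: the function name spack_check_install is hypothetical, and it assumes the Spack environment has already been sourced so that the spack command is available.

```shell
# Hypothetical helper: show the concretized spec first, install only after confirmation.
spack_check_install() {
    spack spec -I "$@" || return 1      # -I shows which packages are already installed
    printf 'Install this spec? [y/N] '
    read -r answer
    case "$answer" in
        y|Y) spack install "$@" ;;
        *)   echo "Aborted." ;;
    esac
}
```

Usage: spack_check_install hdf5 +cxx ~hl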

Enabling/Disabling Variants

Control variants with + (enable) or ~ (disable):

spack install hdf5 +mpi +cxx ~hl # Enable MPI and C++, disable high-level API

For packages with CUDA, use compute capabilities 8.0 (for GPU nodes) and 9.0 (for FatGPU nodes):

spack install openmpi +cuda cuda_arch=80,90

Specifying Compilers

Use % to specify a compiler. Check available compilers with:

spack compilers

Example:

spack install hdf5 %gcc@11.4.1

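When using a compiler other than GCC 11.4.1, dependencies must also be built with that compiler. The --fresh flag tells Spack not to reuse existing installations, so the dependencies are rebuilt as well: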

spack install --fresh hdf5 %gcc@13.2.0

Specifying Dependencies

Use ^ to specify dependencies with versions or variants:

spack install hdf5 ^openmpi@4.1.5

Dependencies can also have variants:

spack install hdf5 +mpi ^openmpi@4.1.5 +threads_multiple

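Variants bind to the package spec they follow: variants placed before the first ^ apply to the package itself, and variants placed after ^<dependency> apply to that dependency. A misplaced variant can make the installation fail.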

Putting It All Together

Combine options for customized installations:

spack install hdf5@1.14.3 %gcc@11.4.1 +mpi ~hl ^openmpi@4.1.5 +cuda cuda_arch=80,90

- Compiles HDF5 1.14.3 with GCC 11.4.1.

- Enables MPI support and disables the high-level API.

- Uses OpenMPI 4.1.5 with CUDA support as a dependency.

Building and Adding a New Compiler

Install a new compiler (e.g., GCC 13.2.0) with:

spack install gcc@13.2.0

Add it to Spack’s compiler list:

spack compiler add $(spack location -i gcc@13.2.0)

Verify it’s recognized:

spack compilers

Use it to build packages:

spack install --fresh hdf5 %gcc@13.2.0

Comparing Installed Package Variants

If you have multiple installations of the same package with different variants, you can inspect their configurations with Spack’s find, spec, and diff commands.

List installed packages with variants

Use spack find -vl to show all installed variants and their hashes:

spack find -vl hdf5

-- linux-almalinux9-zen4 / gcc@11.4.1 ---------------------------

amrsck6 hdf5@1.14.3~cxx~fortran+hl~ipo~java~map+mpi+shared~subfiling~szip~threadsafe+tools api=default build_system=cmake build_type=Release generator=make patches=82088c8

2dsgtoe hdf5@1.14.3+cxx+fortran~hl~ipo~java~map+mpi+shared~subfiling~szip~threadsafe+tools api=default build_system=cmake build_type=Release generator=make patches=82088c8

==> 2 installed packages

amrsck6 and 2dsgtoe are the unique hashes of the two installations. One installation is built with +hl, while the other is built with ~hl (and with +cxx +fortran).

Inspect specific installations

Use spack spec /<hash> to view details of a specific installation:

spack spec /amrsck6

spack spec /2dsgtoe

Compare two installations

To compare variants between two installations, use spack diff with both hashes:

spack diff /amrsck6 /2dsgtoe

You will see a diff in the style of git:

--- hdf5@1.14.3/amrsck6mml43sfv4bhvvniwdydaxfgne

+++ hdf5@1.14.3/2dsgtoevoypx7dr45l5ke2dlb56agvz4

@@ virtual_on_incoming_edges @@

- openmpi mpi

+ mpich mpi

So one version depends on OpenMPI while the other depends on MPICH.

Removing Packages

Remove an installed package with spack uninstall <package>. If multiple variants of a package are installed, specify the hash:

spack uninstall /amrsck6

Central Spack Installation

Activate the central Spack installation with:

source /cluster/spack/0.23.0/share/spack/setup-env.sh

Use it as a starting point for your own builds without rebuilding everything from scratch.

Add these files to ~/.spack:

~/.spack/upstreams.yaml:

upstreams:
  central-spack:
    install_tree: /cluster/spack/opt

~/.spack/config.yaml:

config:
  install_tree:
    root: $HOME/spack/opt/spack
  source_cache: $HOME/spack/cache
  license_dir: $HOME/spack/etc/spack/licenses

~/.spack/modules.yaml:

modules:
  default:
    roots:
      lmod: $HOME/spack/share/spack/lmod
    enable: [lmod]
    lmod:
      all:
        autoload: direct
      hide_implicits: true
      hierarchy: []

Add these lines to your ~/.bashrc:

export MODULEPATH=$MODULEPATH:$HOME/spack/share/spack/lmod/linux-almalinux9-x86_64/Core

. /cluster/spack/0.23.0/share/spack/setup-env.sh

Then run:

Overriding Package Definitions

Create ~/.spack/repos.yaml:

repos:
  - $HOME/spack/var/spack/repos

And a local repo description in ~/spack/var/spack/repos/repo.yaml:

repo:
  namespace: overrides

Copy and edit a package definition, e.g., for ffmpeg:

cd ~/spack/var/spack/repos/

mkdir -p packages/ffmpeg

cp /cluster/spack/0.23.0/var/spack/repos/builtin/packages/ffmpeg/package.py packages/ffmpeg

vim packages/ffmpeg/package.py
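The manual steps above can be bundled into one function if you override several packages. This is a sketch under stated assumptions: the function name init_override_repo is hypothetical, and the default location of the builtin repository is the path used in the cp command above.

```shell
# Hypothetical helper: create the local override repo skeleton and copy one package into it.
init_override_repo() {
    repo_root=$1      # e.g. "$HOME/spack/var/spack/repos"
    pkg=$2            # e.g. ffmpeg
    builtin=${3:-/cluster/spack/0.23.0/var/spack/repos/builtin}

    mkdir -p "$repo_root/packages/$pkg" || return 1

    # Write repo.yaml only once so that manual edits are not overwritten.
    if [ ! -f "$repo_root/repo.yaml" ]; then
        printf 'repo:\n  namespace: overrides\n' > "$repo_root/repo.yaml"
    fi

    if [ -f "$builtin/packages/$pkg/package.py" ]; then
        cp "$builtin/packages/$pkg/package.py" "$repo_root/packages/$pkg/"
    else
        echo "warning: no builtin definition found for $pkg" >&2
    fi
}
```

Usage: init_override_repo "$HOME/spack/var/spack/repos" ffmpeg, then edit the copied package.py as described above.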

Alternatively, you can use a fully independent Spack installation in your home directory or opt for EasyBuild.

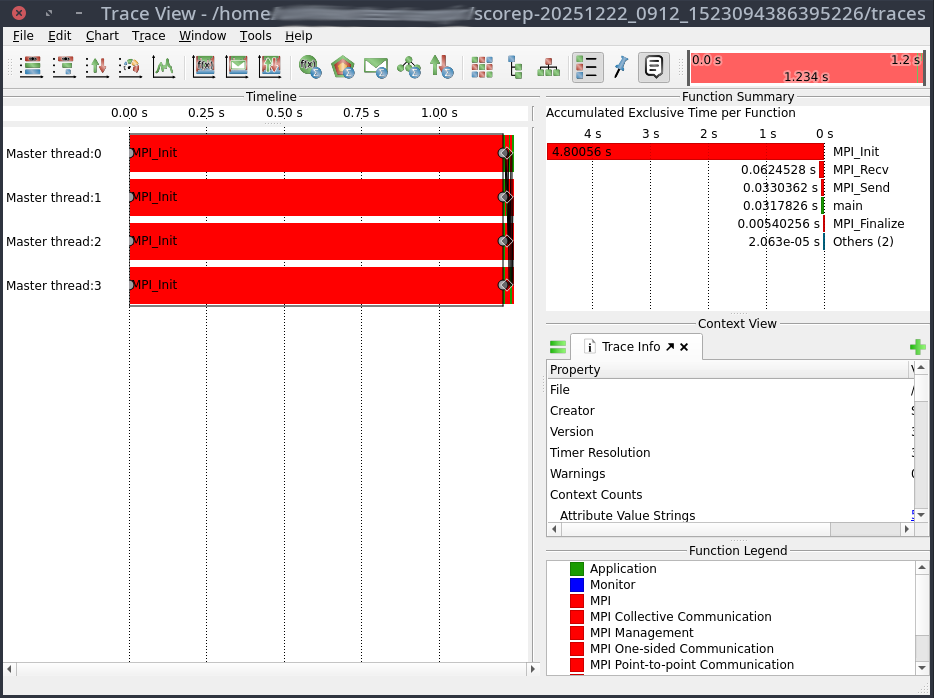

Vampir

Vampir is a framework for analyzing the behavior of serial and parallel programs by means of function instrumentation via Score-P.

Vampir is licensed by HPC.nrw and can be used freely on the Elysium Cluster.

This page presents a small test case that shows how Score-P can be used to generate profiling data and how Vampir can be started on Elysium.

For information on how to use Vampir to analyze your application, extract useful performance metrics, and identify bottlenecks, please refer to the Score-P Cheat Sheet and the official Vampir Documentation.

Compilation with Instrumented Functions

In order to generate profiling data the function calls in the application need to be instrumented.

This means inserting additional special function calls that record the time, current call stack, and much more.

Fortunately, this does not have to be done manually, but can easily be achieved by using the Score-P compiler wrapper.

To follow along, you can use this MPI Example Code.

To use the Score-P compiler wrapper, simply prefix the compiler command with scorep:

module load openmpi/5.0.5-d3ii4pq

module load scorep/8.4-openmpi-5.0.5-6mtx3p6

scorep mpicc -o mpi-test.x mpi-test.c

In the case of a Makefile, or other build systems, the compiler variable has to be adjusted accordingly.
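For a Makefile, the compiler can often be overridden on the command line without editing the file. The sketch below assumes that the Makefile compiles with the conventional CC variable; your project may use a different name. The function name build_instrumented is hypothetical.

```shell
# Assumption: the Makefile invokes the compiler via $(CC).
# Variables given on the make command line override assignments inside the Makefile.
build_instrumented() {
    make CC="scorep mpicc" "$@"
}
```

Usage: build_instrumented -j4, after loading the openmpi and scorep modules shown above.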

Generating Profiling Data

Profiling data is created by running the application.

Note that the profiling files can grow to enormous sizes.

Thus, it is advisable to choose a small representative test case for your application and not a full production run.

In its default mode, Score-P collects profiling data by sampling the application’s call stack from time to time. To generate an accurate profile, tracing needs to be enabled in your job script:

module load openmpi/5.0.5-d3ii4pq

module load scorep/8.4-openmpi-5.0.5-6mtx3p6

export SCOREP_ENABLE_TRACING=true

mpirun -np 4 ./mpi-test.x

Here is a full job script for the example:

#!/bin/bash

#SBATCH --partition=cpu

#SBATCH --ntasks=4

#SBATCH --nodes=1

#SBATCH --account=<Account>

#SBATCH --time=00-00:05:00

module purge

module load openmpi/5.0.5-d3ii4pq

module load scorep/8.4-openmpi-5.0.5-6mtx3p6

export SCOREP_ENABLE_TRACING=true

mpirun -np 4 ./mpi-test.x

The execution of the instrumented application will take significantly longer than usual.

Thus, it should never be used for production runs, but merely for profiling.

After the application has finished, you will find a new directory whose name contains the time stamp and some other information, e.g. scorep-20251222_0912_1523094386395226.

The file traces.otf2 contains the profiling data required by Vampir.
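After several runs, a number of these directories accumulate. The following sketch picks the most recent one and reports its size. The function name latest_scorep_dir is hypothetical, and the approach relies on the scorep-<date>_<time>_<id> naming shown above, which sorts chronologically by name down to the minute.

```shell
# Hypothetical helper: print the most recent Score-P experiment directory below $1.
latest_scorep_dir() {
    base=${1:-.}
    latest=
    for d in "$base"/scorep-*/; do
        # Glob results are sorted by name, so the last existing match is the newest.
        [ -d "$d" ] && latest=${d%/}
    done
    [ -n "$latest" ] && printf '%s\n' "$latest"
}

exp=$(latest_scorep_dir .) || echo "no Score-P experiment directory found"
if [ -n "$exp" ] && [ -f "$exp/traces.otf2" ]; then
    du -sh "$exp"      # trace directories can become very large
fi
```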

Visualizing With Vampir

To visualize the profiling data, a Visualization Session has to be established.

Vampir can be started with

module load vampir

vglrun +pr -fps 20 vampir ./traces.otf2

This opens the Vampir graphical user interface.

VASP

Build configuration (MKL)

On Elysium, VASP can be built with Spack using:

spack install vasp@6.4.3 +openmp +fftlib ^openmpi@5.0.5 ^fftw@3+openmp ^intel-oneapi-mkl threads=openmp +ilp64

This configuration uses:

- Intel oneAPI MKL (ILP64) for BLAS, LAPACK and ScaLAPACK,

- VASP’s internal FFTLIB to avoid MKL CDFT issues on AMD,

- OpenMPI 5.0.5 as MPI implementation,

- OpenMP enabled for hybrid parallelisation.

We choose MKL as baseline because it is the de-facto HPC standard and performs well on AMD EPYC when AVX512 code paths are enabled.

Activating AVX512

Intel’s MKL only enables AVX512 optimisations on Intel CPUs.

On AMD, MKL defaults to AVX2/SSE code paths.

To unlock the faster AVX512 kernels on AMD EPYC we provide libfakeintel, which fakes Intel CPUID flags.

| MKL version | Library to preload | Note |
|---|---|---|
| ≤ 2024.x | /lib64/libfakeintel.so | |
| ≥ 2025.x | /lib64/libfakeintel2025.so | works for older versions too |

⚠ Intel gives no guarantee that all AVX512 instructions work on AMD CPUs.

In practice, the community has shown that not every kernel uses full AVX512 width, but the overall speed-up is still substantial.

Activate AVX512 by preloading the library in your job:

export LD_PRELOAD=/lib64/libfakeintel2025.so:${LD_PRELOAD}
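If LD_PRELOAD is empty, the line above leaves a trailing colon, and it gives no hint when the library is missing on a node. A slightly more defensive variant could look like the sketch below. The function name preload_lib is hypothetical; the library path is the one given above.

```shell
# Hypothetical helper: prepend a library to LD_PRELOAD only if the file is readable.
preload_lib() {
    if [ -r "$1" ]; then
        # ${VAR:+...} adds the colon only when LD_PRELOAD already has content.
        export LD_PRELOAD="$1${LD_PRELOAD:+:$LD_PRELOAD}"
    else
        echo "warning: $1 not found, LD_PRELOAD unchanged" >&2
        return 1
    fi
}

# Only needed in jobs that use the MKL build.
preload_lib /lib64/libfakeintel2025.so || true
```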

Test case 1 – Si256 (DFT / Hybrid HSE06)

This benchmark uses a 256-atom silicon supercell (Si256) with the HSE06 hybrid functional.

Hybrid DFT combines FFT-heavy parts with dense BLAS/LAPACK operations and is therefore a good proxy for most large-scale electronic-structure workloads.

Baseline: MPI-only, 1 node

| Configuration | Time [s] | Speed-up vs baseline |
|---|---|---|
| MKL (no AVX512) | 2367 | 1.00× |
| MKL (+ AVX512) | 2017 | 1.17× |

→ Always enable AVX512.

The baseline DFT case runs 17 % faster with libfakeintel.
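The speed-up values in the tables on this page are the ratio of the baseline run time to the new run time. To repeat the calculation for your own timings, a small helper such as the following can be used; the function name speedup is hypothetical.

```shell
# speedup BASELINE_SECONDS NEW_SECONDS -> prints baseline/new with two decimals
speedup() {
    LC_ALL=C awk -v base="$1" -v new="$2" 'BEGIN { printf "%.2f\n", base / new }'
}

speedup 2367 2017   # MKL without vs. with AVX512, prints 1.17
```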

Build configuration (AOCL)

AOCL (AMD Optimized Libraries) is AMD’s analogue to MKL, providing:

- AMDBLIS (BLAS implementation)

- AMDlibFLAME (LAPACK)

- AMDScaLAPACK, AMDFFTW optimised for AMD EPYC

- built with AOCC compiler

Build example:

spack install vasp@6.4.3 +openmp +fftlib %aocc ^amdfftw@5 ^amdblis@5 threads=openmp ^amdlibflame@5 ^amdscalapack@5 ^openmpi

AOCL detects AMD micro-architecture automatically and therefore does not require libfakeintel.

Baseline: MPI-only, 1 node

| Configuration | Time [s] | Speed-up vs baseline |
|---|---|---|
| MKL (+ AVX512) | 2017 | 1.00× |
| AOCL (AMD BLIS / libFLAME) | 1919 | 1.05× |

The AOCL build is another 5 % faster than MKL with AVX512 enabled.

Hybrid parallelisation and NUMA domains

Each compute node has two EPYC 9254 CPUs with 24 cores each (48 total).

Each CPU is subdivided into 4 NUMA domains with separate L3 caches and memory controllers.

- MPI-only: 48 ranks per node (1 per core).

- Hybrid L3: 8 MPI ranks × 6 OpenMP threads each, bound to individual L3 domains.

This L3-hybrid layout increases memory locality, because each rank mainly uses its own local memory and avoids cross-socket traffic.
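The rank and thread counts follow directly from the node layout: one MPI rank per L3 domain, and one OpenMP thread per core in that domain. The sketch below derives the corresponding Slurm options for a given number of nodes. The function name hybrid_layout is hypothetical; the core and domain counts are the ones stated above.

```shell
# hybrid_layout NODES -> prints Slurm options for one MPI rank per L3 domain
hybrid_layout() {
    nodes=$1
    cores_per_node=48     # 2 CPUs x 24 cores
    l3_per_node=8         # 2 CPUs x 4 L3 domains
    threads=$(( cores_per_node / l3_per_node ))
    echo "--nodes=$nodes --ntasks=$(( nodes * l3_per_node )) --cpus-per-task=$threads"
}

hybrid_layout 1   # prints: --nodes=1 --ntasks=8 --cpus-per-task=6
hybrid_layout 2   # prints: --nodes=2 --ntasks=16 --cpus-per-task=6
```

OMP_NUM_THREADS should be set to the same value as --cpus-per-task.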

Single-node hybrid results (Si256)

| Configuration | Time [s] | Speed-up vs MPI-only |
|---|---|---|
| MKL (L3 hybrid) | 1936 | 1.04× |
| AOCL (L3 hybrid) | 1830 | 1.05× |

Hybrid L3 adds a modest 4-5 % speed-up.

Multi-node scaling (Si256)

| Configuration | Nodes | Time [s] | Speed-up vs same configuration on 1 node |
|---|---|---|---|
| MKL MPI-only | 2 | 1305 | 1.55× |
| AOCL MPI-only | 2 | 1142 | 1.68× |
| MKL L3 hybrid | 2 | 1147 | 1.69× |
| AOCL L3 hybrid | 2 | 968 | 1.89× |

Interpretation

AOCL scales best across nodes. For MKL, the L3-hybrid variant scales noticeably better than its MPI-only counterpart (1.69× vs 1.55×).

The L3-hybrid layout maintains efficiency even in the multi-node regime.
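A convenient way to judge multi-node scaling is the parallel efficiency, i.e. the speed-up divided by the number of nodes. The sketch below computes it from the single-node and multi-node run times in the tables above; the function name efficiency is hypothetical.

```shell
# efficiency T_ONE_NODE T_N_NODES NODES -> parallel efficiency in percent
efficiency() {
    LC_ALL=C awk -v t1="$1" -v tn="$2" -v n="$3" \
        'BEGIN { printf "%.1f\n", 100 * t1 / (tn * n) }'
}

efficiency 2017 1305 2   # MKL MPI-only, prints 77.3
efficiency 1830 968 2    # AOCL L3 hybrid, prints 94.5
```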

Recommendations for DFT / Hybrid-DFT workloads

- AOCL generally outperforms MKL (+AVX512) on AMD EPYC.

- Prefer L3-Hybrid (8×6) on single-node and even multi-node jobs for FFT-heavy hybrid-DFT cases.

- For pure MPI runs, both MKL (+AVX512) and AOCL scale well; AOCL slightly better.

- Always preload libfakeintel2025.so if MKL is used.

Jobscript examples

AOCL – Hybrid L3 (8×6)

#!/bin/bash

#SBATCH -J vasp_aocl_l3hyb

#SBATCH -N 1

#SBATCH --ntasks=8

#SBATCH --cpus-per-task=6

#SBATCH -p cpu

#SBATCH -t 48:00:00

#SBATCH --exclusive

module purge

module load vasp-aocl

export OMP_NUM_THREADS=6

export OMP_PLACES=cores

export OMP_PROC_BIND=close

export BLIS_NUM_THREADS=6

mpirun -np 8 --bind-to l3 --report-bindings vasp_std

MKL (+AVX512) – Hybrid L3 (8×6)

#!/bin/bash

#SBATCH -J vasp_mkl_avx512_l3hyb

#SBATCH -N 1

#SBATCH --ntasks=8

#SBATCH --cpus-per-task=6

#SBATCH -p cpu

#SBATCH -t 48:00:00

#SBATCH --exclusive

module purge

module load vasp-mkl

export LD_PRELOAD=/lib64/libfakeintel2025.so:${LD_PRELOAD}

export OMP_NUM_THREADS=6

export OMP_PLACES=cores

export OMP_PROC_BIND=close

export MKL_NUM_THREADS=6

export MKL_DYNAMIC=FALSE

mpirun -np 8 --bind-to l3 --report-bindings vasp_std

Test case 2 – XAS (Core-level excitation)

The XAS Mn-in-ZnO case models a core-level excitation (X-ray Absorption Spectroscopy).

These workloads are not FFT-dominated; instead they involve many unoccupied bands and projector evaluations.

Single-node results (XAS)

| Configuration | Time [s] | Relative |
|---|---|---|
| MKL MPI-only | 897 | 1.00× |
| AOCL MPI-only | 905 | 0.99× |
| MKL L3 hybrid | 1202 | 0.75× |
| AOCL L3 hybrid | 1137 | 0.79× |

Multi-node scaling (XAS)

| Configuration | Nodes | Time [s] | Relative |
|---|---|---|---|
| MKL MPI-only | 2 | 1333 | 0.67× |
| AOCL MPI-only | 2 | 1309 | 0.69× |
| MKL L3 hybrid | 2 | 1366 | 0.66× |
| AOCL L3 hybrid | 2 | 1351 | 0.67× |

Interpretation

For core-level / XAS calculations, hybrid OpenMP parallelisation is counter-productive, and scaling beyond one node deteriorates performance due to load imbalance and communication overhead.

Recommendations for XAS and similar workloads

- Use MPI-only and single-node configuration.

- MKL and AOCL perform identically within margin of error.

- Hybrid modes reduce efficiency and should be avoided.

- Set OMP_NUM_THREADS=1 to avoid unwanted OpenMP activity.
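The job script examples above cover only the hybrid layout. For an MPI-only, single-node run, a job script could look like the sketch below, which writes the script to a file for submission. The file name, the job name, and the use of --bind-to core are assumptions; the module name, partition, and time limit are taken from the hybrid examples above.

```shell
jobfile=${JOBFILE:-vasp_xas_mpi.sh}   # hypothetical file name

cat > "$jobfile" <<'EOF'
#!/bin/bash
#SBATCH -J vasp_xas_mpi
#SBATCH -N 1
#SBATCH --ntasks=48
#SBATCH --cpus-per-task=1
#SBATCH -p cpu
#SBATCH -t 48:00:00
#SBATCH --exclusive

module purge
module load vasp-aocl

# One thread per rank, so that OpenMP and threaded BLAS do not oversubscribe cores.
export OMP_NUM_THREADS=1
export BLIS_NUM_THREADS=1

mpirun -np 48 --bind-to core --report-bindings vasp_std
EOF

echo "wrote $jobfile; submit with: sbatch $jobfile"
```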

General guidance

For optimal performance on Elysium with AMD EPYC processors, we recommend using the AOCL build as the default choice for all VASP workloads. AOCL consistently outperforms or matches MKL (+AVX512) across tested scenarios (e.g., 5 % faster for Si256 single-node, up to 1.89× speedup for multi-node scaling) and does not require additional configuration like libfakeintel. However, MKL remains a robust alternative, especially for users requiring compatibility with existing workflows.

| Workload type | Characteristics | Recommended setup |
|---|---|---|
| Hybrid DFT (HSE06, PBE0, etc.) | FFT + dense BLAS, OpenMP beneficial | AOCL L3 Hybrid (8×6) |
| Standard DFT (PBE, LDA) | light BLAS, moderate FFT | AOCL L3 Hybrid or MPI-only |
| Core-level / XAS / EELS | many unoccupied bands, projectors | AOCL MPI-only (single-node) |
| MD / AIMD (>100 atoms) | large FFTs per step | AOCL L3 Hybrid |
| Static small systems (<20 atoms) | few bands, small matrices | AOCL MPI-only |

Recommendations:

- Default to AOCL: Use the AOCL build for all workloads unless specific constraints (e.g., compatibility with Intel-based tools) require MKL.

- AVX512 for MKL: If using MKL, always preload libfakeintel2025.so to enable AVX512 optimizations.

- Benchmark if unsure: Test both MPI-only and L3 Hybrid on one node to determine the optimal configuration for your specific system.