Music Signal Processing for Cochlear Implants

Cochlear implants (CIs) restore hearing in profoundly hearing-impaired or deaf people by bypassing the impaired auditory periphery and electrically stimulating the auditory nerve via an array of 12 to 22 electrodes inserted into the cochlea. The electric stimulation pattern is generated by an external sound processor, which decomposes the incoming speech or audio signal into frequency subbands. The subband envelope signals are then used to modulate trains of biphasic stimulation pulses. While this procedure can restore a high degree of speech intelligibility, music perception remains poor for most CI users; in particular, pitch and timbre are severely distorted. This can be attributed to the small number of electrodes in current CIs, the spread of excitation in the conductive perilymph fluid within the cochlea, and the insufficient coding and transmission of temporal fine structure information, which is not contained in the envelope signals. In contrast, CI users can access rhythmic information as well as normal-hearing listeners do. Consequently, CI users prefer music composed of simple melodies and rhythmic elements.
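The envelope-based processing chain described above can be illustrated with a minimal NumPy sketch. It makes several assumptions:

- an FFT-based filterbank with 12 log-spaced channels between 200 Hz and 8 kHz;
- one interleaved, charge-balanced biphasic pulse per channel and analysis frame;
- plain peak normalization in place of the loudness mapping a real sound processor applies.

The function names are hypothetical. This is not the coding strategy of any particular CI device.

```python
import numpy as np

def band_envelopes(x, fs, n_channels=12, frame_len=256, hop=32, f_lo=200.0, f_hi=8000.0):
    """FFT-filterbank envelopes: returns an array of shape (n_channels, n_frames)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop:i * hop + frame_len] * window for i in range(n_frames)])
    spec = np.abs(np.fft.rfft(frames, axis=1))          # magnitude spectrum per frame
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    edges = np.geomspace(f_lo, f_hi, n_channels + 1)    # log-spaced band edges (assumption)
    env = np.zeros((n_channels, n_frames))
    for c in range(n_channels):
        in_band = (freqs >= edges[c]) & (freqs < edges[c + 1])
        # Envelope = square root of the summed bin powers inside the band
        env[c] = np.sqrt(np.sum(spec[:, in_band] ** 2, axis=1))
    return env

def interleaved_biphasic_pulses(env, phase_len=2):
    """Per frame, fire one charge-balanced biphasic pulse on each channel in turn."""
    n_ch, n_frames = env.shape
    peak = env.max()
    amp = env / peak if peak > 0 else env               # normalize to [0, 1] (assumption)
    slot = n_ch * 2 * phase_len                         # samples per stimulation cycle
    out = np.zeros((n_ch, n_frames * slot))
    for t in range(n_frames):
        for c in range(n_ch):
            start = t * slot + c * 2 * phase_len        # channels never overlap in time
            out[c, start:start + phase_len] = amp[c, t]                   # first phase
            out[c, start + phase_len:start + 2 * phase_len] = -amp[c, t]  # opposite phase
    return out

# Usage: a 1 kHz tone should mainly drive the channel whose band contains 1 kHz.
fs = 16000
t = np.arange(int(0.1 * fs)) / fs
x = np.sin(2 * np.pi * 1000.0 * t)
env = band_envelopes(x, fs)
pulses = interleaved_biphasic_pulses(env)
print(env.shape, pulses.shape)  # (12, 43) (12, 2064)
```

Interleaving the channels in time mimics the common practice of avoiding simultaneous stimulation. Everything that is not carried by the envelopes, in particular temporal fine structure, is discarded by this scheme.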

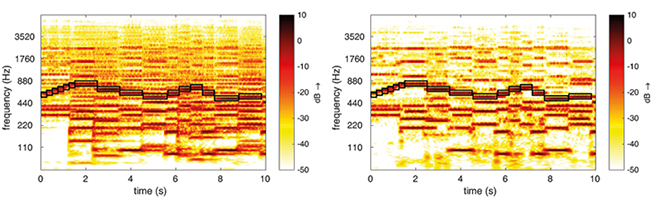

To mitigate the distortions of music perceived by CI listeners, we first developed methods for reducing the spectral complexity of classical music by applying dimensionality reduction techniques (e.g., principal component analysis, PCA) in the time-frequency domain. Figure 1 shows the spectrograms of an exemplary classical music piece (clarinet accompanied by strings) before processing (left) and after computing block-wise frequency-domain reduced-rank approximations (right). The processing clearly reduces spectral complexity: low-power harmonics of both the accompaniment and the leading voice are attenuated, while the most prominent harmonics of the leading voice are retained. In listening experiments, CI users showed a statistically significant preference for the proposed processing methods over the unprocessed signals. The method was subsequently extended to a binaural configuration and refined for real-time application.
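A block-wise reduced-rank approximation can be sketched in a few lines of NumPy. The sketch makes these assumptions:

- the input is a non-negative magnitude spectrogram `S` with frames as rows (observations) and frequency bins as columns (variables);
- blocks are 32 frames long;
- each block keeps its `k` leading principal components, computed via an SVD of the mean-centered block.

The transform, block length and PCA orientation of the published method may differ. The function names are hypothetical.

```python
import numpy as np

def reduced_rank_block(S, k):
    """Rank-k PCA approximation of one block S (frames x frequency bins)."""
    mean = S.mean(axis=0, keepdims=True)                # mean spectrum of the block
    U, s, Vt = np.linalg.svd(S - mean, full_matrices=False)
    approx = (U[:, :k] * s[:k]) @ Vt[:k] + mean         # keep k principal components
    return np.maximum(approx, 0.0)                      # magnitudes must stay non-negative

def reduce_spectral_complexity(S, k, block_len=32):
    """Apply the rank-k approximation block by block along the time axis."""
    out = np.empty_like(S, dtype=float)
    for start in range(0, S.shape[0], block_len):
        block = S[start:start + block_len]
        out[start:start + block_len] = reduced_rank_block(block, k)
    return out

# Usage on a stand-in magnitude spectrogram (200 frames x 129 bins)
rng = np.random.default_rng(0)
S = np.abs(rng.standard_normal((200, 129)))
S_simple = reduce_spectral_complexity(S, k=8)
```

In a full system, `S` would come from a time-frequency transform of the music signal, and the simplified magnitudes would be resynthesized, for example with the original phase. Small `k` keeps only the dominant spectral patterns of each block, which is what attenuates the weaker harmonics.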

Figure 1: Spectrograms before (left) and after (right) spectral complexity reduction. Markers indicate the fundamental frequency of the melody instrument.

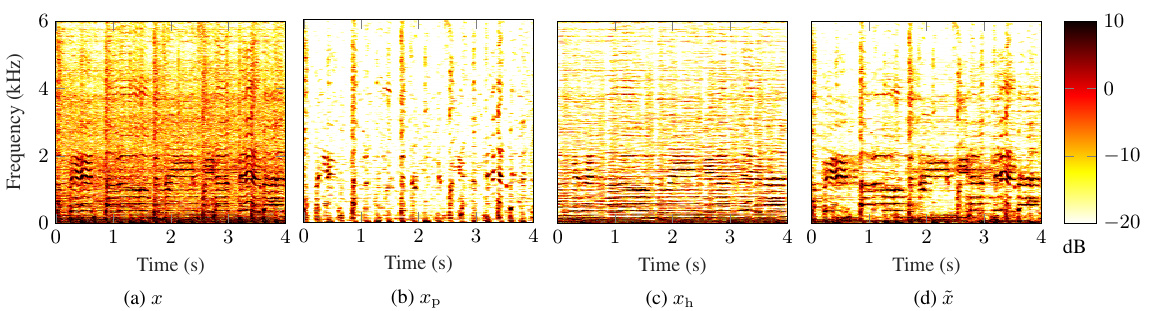

We extended this algorithm by applying harmonic/percussive source separation (HPSS), followed by a PCA-based reduced-rank approximation of the harmonic component and a subsequent remix. This approach reduces spectral complexity as in the classical-music case while accentuating the rhythmic information conveyed by note onsets and drum beats. Hence, it is well suited both for popular music genres with vocals and percussive instruments and for purely instrumental classical music. Figure 2 shows spectrograms of an exemplary pop music piece before and after processing. While the harmonic components become sparser and thus easier to access, the rhythmic contributions (wide-band transients) are preserved.
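The HPSS, reduced-rank and remix chain can be sketched as follows for a magnitude spectrogram `S` (frames × frequency bins). The sketch swaps in the widely used median-filtering approach to HPSS with soft masks; it is not necessarily the separation used in the published work. Other assumptions:

- the rank-`k` PCA step is applied to the whole harmonic part rather than block-wise, for brevity;
- the percussive part is added back with a hypothetical gain `percussive_gain_db`;
- filter lengths, `k` and all function names are illustrative.

```python
import numpy as np

def _median_along(S, length, axis):
    """Median filter of odd `length` along one axis, edge-padded so the shape is kept."""
    pad = length // 2
    widths = [(pad, pad) if a == axis else (0, 0) for a in range(S.ndim)]
    padded = np.pad(S, widths, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, length, axis=axis)
    return np.median(windows, axis=-1)

def hpss(S, time_len=17, freq_len=17, eps=1e-12):
    """Median-filtering HPSS: returns (harmonic, percussive) magnitude spectrograms."""
    H = _median_along(S, time_len, axis=0)    # smoothing over time keeps sustained partials
    P = _median_along(S, freq_len, axis=1)    # smoothing over frequency keeps broadband transients
    mask_h = H**2 / (H**2 + P**2 + eps)       # soft (Wiener-like) harmonic mask
    return mask_h * S, (1.0 - mask_h) * S

def reduced_rank(S, k):
    """Rank-k PCA approximation, clipped to non-negative magnitudes."""
    mean = S.mean(axis=0, keepdims=True)
    U, s, Vt = np.linalg.svd(S - mean, full_matrices=False)
    return np.maximum((U[:, :k] * s[:k]) @ Vt[:k] + mean, 0.0)

def simplify_music(S, k=8, percussive_gain_db=0.0):
    """Simplify the harmonic part, then remix it with the (optionally boosted) percussive part."""
    harm, perc = hpss(S)
    g = 10.0 ** (percussive_gain_db / 20.0)
    return reduced_rank(harm, k) + g * perc

# Toy spectrogram: one sustained partial (bin 20) and one drum hit (frame 32)
S = np.full((64, 128), 0.01)
S[:, 20] = 1.0
S[32, :] = 1.0
harm, perc = hpss(S)
out = simplify_music(S, k=8)
```

The two median filters exploit the complementary shapes in Figure 2: harmonic partials form horizontal lines and transients form vertical lines. This is why the percussive part can bypass the rank reduction and keep its onsets intact.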

Figure 2: Spectrograms of the original music signal and of different processing stages for an exemplary pop music piece: (a) original signal, (b) percussive signal part, (c) harmonic signal part, (d) proposed simplification, i.e., simplified harmonic part plus percussive part.

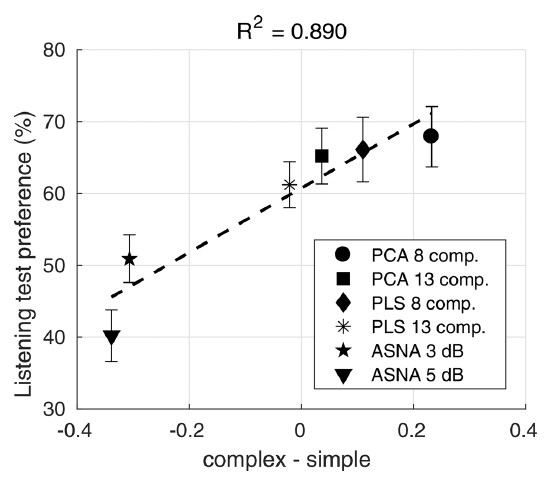

To evaluate music processing schemes for spectral complexity reduction, we also developed objective measures of perceived music complexity and auditory distortion, which agree well with the results of listening tests with CI users (see Figure 3).

Figure 3: Listening test preferences of CI users for different music processing methods vs. the proposed music complexity measure (classical music). Reduced-rank approximations based on principal component analysis (PCA) and partial least-squares analysis (PLS) with 8 or 13 components were compared to a source separation (ASNA) and remix procedure that amplifies the leading voice by 3 dB or 5 dB.
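The parameters compared in Figure 3 can be made concrete with two small helpers. A level change of G dB corresponds to a linear amplitude factor of 10^(G/20). Separately, the explained-variance ratio indicates how much of a block's spectral variance is retained by k principal components.

This is a sketch under stated assumptions:

- the separated leading voice and accompaniment are given as arrays;
- the remix is a plain weighted sum;
- the explained-variance ratio is computed on a frames × bins block.

The function names are hypothetical, and these helpers are not the ASNA or PCA implementations evaluated in the study.

```python
import numpy as np

def db_to_amplitude(gain_db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)

def remix_leading_voice(lead, accompaniment, gain_db):
    """Remix separated signals with the leading voice boosted by gain_db."""
    lead = np.asarray(lead, dtype=float)
    accompaniment = np.asarray(accompaniment, dtype=float)
    return db_to_amplitude(gain_db) * lead + accompaniment

def explained_variance_ratio(S, k):
    """Fraction of a block's variance captured by its k leading principal components."""
    s = np.linalg.svd(S - S.mean(axis=0, keepdims=True), compute_uv=False)
    return float(np.sum(s[:k] ** 2) / np.sum(s ** 2))

for g in (3.0, 5.0):
    print(f"{g:.0f} dB -> x{db_to_amplitude(g):.3f}")
# 3 dB -> x1.413
# 5 dB -> x1.778

rng = np.random.default_rng(1)
block = np.abs(rng.standard_normal((64, 129)))   # stand-in spectrogram block
ratio_8, ratio_13 = explained_variance_ratio(block, 8), explained_variance_ratio(block, 13)
```

A 5 dB boost thus raises the leading voice's amplitude by about 78 %, versus about 41 % for 3 dB. Going from 8 to 13 components can only increase the retained variance, so it leaves more of the original spectral detail in place.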

Recent work in this area is summarized in:

Nogueira, W., Nagathil, A., Martin, R. (2019). "Making Music More Accessible for Cochlear Implant Listeners: Recent Developments", IEEE Signal Processing Magazine, vol. 36, no. 1, pp. 115-127, January 2019.

Selected References

Lentz, B., Nagathil, A., Gauer, J., Martin, R. (2020). "Harmonic/Percussive Sound Separation and Spectral Complexity Reduction of Music Signals for Cochlear Implant Listeners", in Proc. IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), pp. 8713-8717, Barcelona, Spain, May 2020.

Gauer, J., Krymova, E., Belomestny, D., Martin, R. (2019). "Spectral Complexity Reduction of Music Signals for Cochlear Implant Users based on Subspace Tracking", in Proc. European Signal Processing Conference (EUSIPCO), A Coruña, Spain, September 2-6, 2019.

Gauer, J., Nagathil, A., Martin, R., Thomas, J. P., Völter, C. (2019). "Interactive Evaluation of a Music Preprocessing Scheme for Cochlear Implants Based on Spectral Complexity Reduction", Frontiers in Neuroscience, 13, 1206.

Nagathil, A., Martin, R. (2019). "Objective Evaluation of Ideal Time-Frequency Masking for Music Complexity Reduction in Cochlear Implants", in Proc. International Symposium on Computer Music Multidisciplinary Research (CMMR), Marseille, France, October 2019.

Gauer, J., Nagathil, A., Martin, R. (2018). "Binaural spectral complexity reduction of music signals for cochlear implant listeners", in Proc. IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Calgary, Canada, April 2018.

Nagathil, A., Schlattmann, J.-W., Neumann, K., Martin, R. (2018). "Music Complexity Prediction for Cochlear Implant Listeners Based on a Feature-based Linear Regression Model", J. Acoust. Soc. Am. (JASA), 144(1), pp. 1-10, July 2018.

Nagathil, A., Weihs, C., Neumann, K., Martin, R. (2017). "Spectral Complexity Reduction of Music Signals Based on Frequency-domain Reduced-rank Approximations: An Evaluation with Cochlear Implant Listeners", J. Acoust. Soc. Am. (JASA), 142(3), pp. 1219-1228, September 2017.

Krymova, E., Nagathil, A., Belomestny, D., Martin, R. (2017). "Segmentation of Music Signals Based on Explained Variance Ratio for Applications in Spectral Complexity Reduction", in Proc. IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), New Orleans, USA, March 2017.

Nagathil, A., Schlattmann, J.-W., Neumann, K., Martin, R. (2017). "A Feature-based Linear Regression Model for Predicting Perceptual Ratings of Music by Cochlear Implant Listeners", in Proc. IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), New Orleans, USA, March 2017.

Nagathil, A., Weihs, C., Martin, R. (2016). "Spectral Complexity Reduction of Music Signals for Mitigating Effects of Cochlear Hearing Loss", IEEE/ACM Trans. Audio, Speech, and Language Processing, vol. 24, no. 3, pp. 445-458, March 2016.

Nagathil, A., Weihs, C., Martin, R. (2015). "Signal Processing Strategies for Improving Music Perception in the Presence of a Cochlear Hearing Loss", in Proc. Jahrestagung der Deutschen Gesellschaft für Audiologie (DGA), Bochum, Germany, ISBN 978-3-9813141-5-1.

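Schulz, K., Gauer, J., Martin, R., Völter, C. (2023). "Einfluss von Ober- und Untertönen auf die Melodieerkennung mit einem Cochlea Implantat bei SSD" [Influence of overtones and undertones on melody recognition with a cochlear implant in single-sided deafness] (in German), submitted to Laryngo-Rhino-Otologie.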